Chatty's World CS 175: Project in AI (in Minecraft)

Project Summary

The goal of our project is to implement a Natural Language Processing (NLP) system in Minecraft that accepts and interprets user input and has the Minecraft agent act accordingly. To understand varying user input, we will use language processing tools such as the NLTK and Gensim libraries, and the agent's actions will be implemented through Microsoft’s Malmo API. Not every input can be handled: users can phrase requests in an unlimited number of ways, and a Minecraft agent can only perform a limited set of actions. We will start by implementing basic commands (moving, turning, attacking, etc.), then combine these with world state observations to build more intricate commands, including (but not limited to) locating entities, killing mobs, fishing, and finding shelter.

Approach

Our project is divided into two main parts: (1) the NLP and (2) creating Malmo agent actions.

(1) Natural Language Processing

We use the NLTK and Gensim libraries to interpret user input so that we can make the correct action call. With NLTK, we tokenize the user input and perform part-of-speech tagging on it, so that we can extract the important verbs and nouns, which correspond to commands and parameters, respectively. For example, if a user types “find a pig”, we take the words “find” and “pig” and call “find(pig)”: the word “find” is linked to the find function, and the corresponding noun is passed as its parameter.
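A minimal sketch of this verb/noun extraction follows. It assumes the input has already been tokenized and tagged with Penn Treebank tags, which is the `(word, tag)` format that `nltk.pos_tag` returns. The handlers `find` and `kill` and the `COMMANDS` table are hypothetical stand-ins that return a string instead of sending Malmo commands.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical action handlers; real ones would issue Malmo commands.
def find(target: str) -> str:
    return f"find({target})"

def kill(target: str) -> str:
    return f"kill({target})"

# Hypothetical table linking a command verb to its handler function.
COMMANDS: Dict[str, Callable[[str], str]] = {"find": find, "kill": kill}

def parse_command(tagged: List[Tuple[str, str]]) -> Optional[Tuple[str, str]]:
    """Return (verb, noun) from (word, tag) pairs, or None if either is missing."""
    verb: Optional[str] = None
    noun: Optional[str] = None
    for word, tag in tagged:
        w = word.lower()
        # A tagger may mislabel a sentence-initial imperative, so a known
        # command word is accepted as the verb even if it is not tagged VB*.
        if verb is None and (tag.startswith("VB") or w in COMMANDS):
            verb = w
        elif verb is not None and noun is None and tag.startswith("NN"):
            noun = w  # the first noun after the verb becomes the parameter
    if verb is None or noun is None:
        return None
    return verb, noun

def dispatch(tagged: List[Tuple[str, str]]) -> Optional[str]:
    """Call the handler linked to the verb, passing the noun as its parameter."""
    parsed = parse_command(tagged)
    if parsed is None or parsed[0] not in COMMANDS:
        return None
    verb, noun = parsed
    return COMMANDS[verb](noun)

print(dispatch([("find", "VB"), ("a", "DT"), ("pig", "NN")]))  # find(pig)
```

With NLTK installed and its tokenizer and tagger data downloaded, the tagged input could come from `nltk.pos_tag(nltk.word_tokenize("find a pig"))`. An unknown verb such as “locate” parses but does not dispatch. That is the case the word-vector step handles.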

The Gensim library helps with NLP through word vectors: each word we examine from the user input has its own vector representation. We build our word2vec model from Google’s pretrained GoogleNews-vectors-negative300.bin file. If we encounter user input containing verbs or nouns that our NLP system does not recognize, such as “locate boar”, we compare the word vectors of “locate” and “boar” with those of terms we already know. We then link each unknown word to the known word with the highest cosine similarity, provided that similarity exceeds a threshold. This should match “locate” with “find” and “boar” with “pig”, so that “find(pig)” is called again.
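The sketch below shows one way to do this fallback matching. It assumes the vectors are loaded with Gensim's `KeyedVectors.load_word2vec_format(..., binary=True)`. `KNOWN_VERBS`, `KNOWN_NOUNS`, `closest_known`, and `normalize_command` are hypothetical names. The similarity function is passed in as a parameter so that the matching logic can be exercised with small toy vectors; with the real model, `kv.similarity` computes the same cosine similarity.

```python
import math
from typing import Callable, List, Optional, Sequence, Tuple

Similarity = Callable[[str, str], float]

def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity dot(u, v) / (|u| * |v|); 0.0 if either vector is zero."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def load_google_news(path: str = "GoogleNews-vectors-negative300.bin"):
    """Load the pretrained vectors (gensim is imported lazily and must be installed)."""
    from gensim.models import KeyedVectors
    return KeyedVectors.load_word2vec_format(path, binary=True)

# Hypothetical vocabulary that the command handler already understands.
KNOWN_VERBS: List[str] = ["find", "kill"]
KNOWN_NOUNS: List[str] = ["pig", "cow"]

def closest_known(word: str, known: List[str], sim: Similarity, threshold: float) -> Optional[str]:
    """Map `word` to the known term with the highest similarity above `threshold`."""
    if word in known:
        return word
    best, best_score = None, threshold
    for term in known:
        score = sim(word, term)
        if score > best_score:
            best, best_score = term, score
    return best

def normalize_command(verb: str, noun: str, sim: Similarity, threshold: float) -> Optional[Tuple[str, str]]:
    """Replace an unrecognized verb/noun with its closest known counterpart."""
    v = closest_known(verb, KNOWN_VERBS, sim, threshold)
    n = closest_known(noun, KNOWN_NOUNS, sim, threshold)
    return (v, n) if v is not None and n is not None else None

# Toy 4-d vectors standing in for the 300-d Google News vectors.
TOY = {
    "find": [1.0, 0.0, 0.0, 0.0], "kill": [0.0, 1.0, 0.0, 0.0],
    "pig": [0.0, 0.0, 0.0, 1.0], "cow": [0.0, 0.0, 1.0, 1.0],
    "locate": [0.9, 0.1, 0.0, 0.0], "boar": [0.1, 0.0, 0.0, 1.0],
}

def toy_sim(a: str, b: str) -> float:
    return cosine(TOY[a], TOY[b])

print(normalize_command("locate", "boar", toy_sim, threshold=0.26))  # ('find', 'pig')
```

With the real model, `kv = load_google_news()` and `sim = kv.similarity`. Checking `word in kv` first avoids the `KeyError` that Gensim raises for out-of-vocabulary words.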

(2) Malmo Agent Actions

To create actions for our agent, we use Malmo’s InventoryCommands and movement commands (AbsoluteMovementCommands, ContinuousMovementCommands), as well as Malmo’s ObservationFromRay and ObservationFromNearbyEntities. Malmo provides a set of simple movement commands, and we combine these into higher-level commands. Receiving Malmo world state observations is crucial to the success of these commands, because tasks such as finding or killing mobs require observing the world. To find a pig, we check the observation for pig entities, grab their coordinates, and compare them with our agent’s coordinates. We use Euclidean distance to determine which pig is closest, then use movement commands to walk toward it until the pig is observed in our LineOfSight and within striking range. The kill function repeatedly calls the find function to locate the pig/mob, and it strikes until the agent observes that the pig/mob is no longer present in the world state observations. All of our commands (aside from those provided by Malmo) are built from existing commands and rely on observations gathered from the Malmo world.
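Below is a minimal sketch of the find/kill logic. It rests on several assumptions:

- each observation has been parsed with `json.loads` from `world_state.observations[-1].text`;
- the ObservationFromNearbyEntities range is named `entities` in the mission XML, with entries carrying `name`, `x`, `y`, and `z`;
- ObservationFromRay's `LineOfSight` entry carries `type` and `inRange`.

The agent's own position is passed in separately, for example from ObservationFromFullStats' `XPos`/`YPos`/`ZPos`. `find_step` is a hypothetical callback that would use `nearest_entity` to turn or move toward the target. `get_obs` and `send_command` would wrap `agent_host.getWorldState()` and `agent_host.sendCommand`.

```python
import math
from typing import Callable, List, Optional

def nearest_entity(entities: List[dict], agent: dict, name: str) -> Optional[dict]:
    """Return the entity called `name` (e.g. "Pig") closest to the agent by Euclidean distance."""
    candidates = [e for e in entities if e.get("name") == name]
    if not candidates:
        return None
    agent_pos = (agent["x"], agent["y"], agent["z"])
    return min(candidates, key=lambda e: math.dist(agent_pos, (e["x"], e["y"], e["z"])))

def target_in_crosshairs(obs: dict, name: str) -> bool:
    """True if the ray observation reports `name` under the crosshairs and within reach."""
    los = obs.get("LineOfSight")
    return bool(los) and los.get("type") == name and bool(los.get("inRange"))

def kill(name: str,
         get_obs: Callable[[], dict],
         find_step: Callable[[dict, str], None],
         send_command: Callable[[str], None],
         max_steps: int = 500) -> bool:
    """Alternate find and strike until no `name` entity remains in the observations."""
    for _ in range(max_steps):
        obs = get_obs()
        if not any(e.get("name") == name for e in obs.get("entities", [])):
            send_command("attack 0")  # stop attacking (continuous-movement mode)
            return True  # target no longer observed: treat it as killed
        if target_in_crosshairs(obs, name):
            send_command("attack 1")  # strike the entity under the crosshairs
        else:
            find_step(obs, name)  # hypothetical: move/turn toward the nearest target
    return False  # gave up after max_steps observations
```

The `max_steps` cap keeps the loop from running forever if the target wanders out of reach but stays within the observation range.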

Evaluation

Evaluating our project is somewhat difficult because the outcome of our language processing is binary (an input is either interpreted correctly or not). For the most part, the same is true of the new find and kill functions we created for our Malmo agent. The clearest view of our progress is therefore our video demo, which shows two things: (1) our agent succeeding in “finding” and “killing” animals/mobs, and (2) Gensim allowing functions to be called with different wording.

To measure the success of our action functions more quantitatively, we ran our project several times, each time calling our functions at random times and with random parameters (the parameter being the type of entity to act upon). We called the find and kill functions 100 times each and recorded whether our agent succeeded in performing the given task. For the find function, we count a success as locating the correct entity and standing directly in front of it, so that it is in the agent’s crosshairs. This happened in 77 of 100 trials. The 23 failures are not as bad as they sound: in these cases, the agent successfully located and moved to the correct entity, but the entity was slightly to the right or left of the crosshairs, either because it moved or because it was standing at an awkward position or angle. The kill function succeeds when the correct entity dies. Because the kill function calls the find function, the results were very similar: 71 of 100 trials succeeded. The remaining 29 failures were caused by the same crosshair problem, since an animal/mob must be within the crosshairs to be attacked.
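The trial protocol above can be sketched as a small harness. It is a hypothetical helper, not the project's test code: `action` stands for a wrapper that runs find or kill on one target and returns True when the success criterion holds (target in the crosshairs for find, target dead for kill). The random timing of calls is not modeled. `summarize` only restates the reported counts.

```python
import random
from typing import Callable, List, Tuple

def evaluate(action: Callable[[str], bool], targets: List[str],
             trials: int = 100, seed: int = 0) -> Tuple[int, int, float]:
    """Run `action` on randomly chosen targets; return (successes, failures, rate)."""
    rng = random.Random(seed)  # seeded so a test run can be repeated
    successes = sum(1 for _ in range(trials) if action(rng.choice(targets)))
    return successes, trials - successes, successes / trials

def summarize(successes: int, trials: int) -> str:
    """Format a success count as 'k/n succeeded, f failed (p%)'."""
    return f"{successes}/{trials} succeeded, {trials - successes} failed ({successes / trials:.0%})"

print(summarize(77, 100))  # 77/100 succeeded, 23 failed (77%)
print(summarize(71, 100))  # 71/100 succeeded, 29 failed (71%)
```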

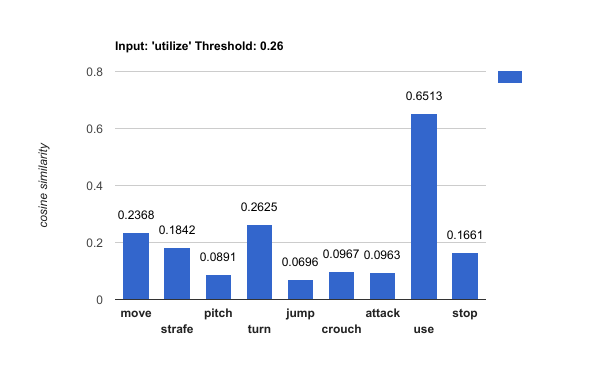

The next part of our evaluation concerns word pairing. The graph below demonstrates how we use cosine similarity to find the closest matching Malmo command. In this example, the input “utilize” is paired with the Malmo command “use”, because that pair has the highest cosine similarity. The second image is a screenshot of how we tested cosine similarities in our code using the word “hop.” We also apply a threshold that filters out words whose cosine similarity with every valid Malmo command is too low. For example, with a threshold of 0.26, the input “fry egg” returns “Could not find a close match.” because no valid command has a high enough cosine similarity with the word “fry”. At this stage, our threshold is set to 0.26. Otherwise, we return the command with the highest cosine similarity above the threshold.
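The ranking and threshold step can be sketched as follows. `MALMO_COMMANDS` is an illustrative subset of Malmo's continuous-movement command names, and `rank_commands`/`match_command` are hypothetical helpers. The similarity function is a parameter; with Gensim it would be `kv.similarity`. The 0.26 threshold and the no-match message come from the text above.

```python
from typing import Callable, List, Tuple

NO_MATCH = "Could not find a close match."
THRESHOLD = 0.26  # the threshold used at the current stage of the project

# Illustrative subset of Malmo continuous-movement command names.
MALMO_COMMANDS = ["move", "turn", "jump", "attack", "use", "crouch"]

def rank_commands(word: str, commands: List[str],
                  sim: Callable[[str, str], float]) -> List[Tuple[str, float]]:
    """Score every command against `word`, highest cosine similarity first."""
    return sorted(((c, sim(word, c)) for c in commands), key=lambda p: p[1], reverse=True)

def match_command(word: str, commands: List[str],
                  sim: Callable[[str, str], float], threshold: float = THRESHOLD) -> str:
    """Return the best-scoring command if it beats `threshold`, else the no-match message."""
    ranked = rank_commands(word, commands, sim)
    if ranked and ranked[0][1] > threshold:
        return ranked[0][0]
    return NO_MATCH
```

`rank_commands` produces the full ordering that a graph like the one above visualizes, and `match_command` keeps only the top entry when it clears the threshold.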

Remaining Goals and Challenges

In the upcoming weeks, our main goals are to build upon our NLP handler, improve our current agent actions (find, kill), and create new actions for our agent to perform. Currently, our NLP handler can only interpret simple, single-action commands such as “turn left”, “find the pig”, and “kill a cow”. We want to extend it to interpret compound sentences (two or more actions or subjects), such as “find the pig, then kill it” or “find and kill the pig”. These two sentences have the same meaning and should produce the same result, but they will have to be handled differently using NLTK.
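The following is one possible approach to compound commands, not something the project has implemented. It works on Penn Treebank-tagged tokens such as those from `nltk.pos_tag`, and `KNOWN_COMMANDS` and `parse_compound` are hypothetical names. Each verb opens a new action. A noun fills every open action that still lacks an object, which covers “find and kill the pig”. The pronoun “it” resolves to the most recent noun, which covers “find the pig, then kill it”.

```python
from typing import List, Optional, Tuple

KNOWN_COMMANDS = {"find", "kill", "turn"}  # hypothetical command vocabulary

def parse_compound(tagged: List[Tuple[str, str]]) -> List[Tuple[str, Optional[str]]]:
    """Split a tagged sentence into a list of (verb, object) actions."""
    actions: List[List[Optional[str]]] = []
    last_noun: Optional[str] = None
    for word, tag in tagged:
        w = word.lower()
        # Base-form verbs (VB) or known command words start a new action.
        if tag == "VB" or w in KNOWN_COMMANDS:
            actions.append([w, None])
            continue
        if tag.startswith("NN"):
            obj = w
            last_noun = w
        elif w == "it" and last_noun is not None:
            obj = last_noun  # resolve the pronoun to the most recent noun
        else:
            continue  # determiners, conjunctions, punctuation, "then", ...
        # Give the object to every action that does not have one yet.
        for action in actions:
            if action[1] is None:
                action[1] = obj
    return [(verb, obj) for verb, obj in actions]
```

Both example sentences reduce to `[("find", "pig"), ("kill", "pig")]`. Commands that take a direction rather than an object (such as “turn left”) would still need their own handling in this scheme.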

While our current functions for finding and killing items/mobs are successful, we would like to make them more efficient by changing our agent’s walking path. As mentioned in our approach, we use Euclidean distance to find the entity closest to us. However, when moving toward that entity, our agent takes an axis-aligned (Manhattan) route, traveling along the x-axis first and then along the z-axis. We hope to have our agent follow the diagonal, straight-line path instead, which is shorter whenever both offsets are nonzero. Writing other new actions will require creativity and more research into what is and is not possible in Malmo. A few ideas we have considered and looked into are riding a horse, feeding animals, finding shelter, and going fishing. We will look further into implementing these in the upcoming weeks and keep thinking of more possibilities.
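The sketch below shows the geometry behind the diagonal path. It is one possible approach, not the project's code. It assumes Minecraft's yaw convention (yaw 0 faces +z, yaw 90 faces -x), so the heading toward a target is `-atan2(dx, dz)`. The agent could then face that heading, for example with AbsoluteMovementCommands' `setYaw`, or turn by `turn_delta` using continuous `turn` commands, and walk straight ahead. For an offset of 3 blocks in x and 4 in z, the axis-aligned route covers 7 blocks, while the straight line covers 5.

```python
import math
from typing import Tuple

def path_lengths(dx: float, dz: float) -> Tuple[float, float]:
    """(Manhattan, Euclidean) length of a horizontal offset (dx, dz)."""
    return abs(dx) + abs(dz), math.hypot(dx, dz)

def yaw_to_target(ax: float, az: float, tx: float, tz: float) -> float:
    """Yaw in degrees facing from (ax, az) toward (tx, tz).

    Assumes Minecraft's convention: 0 faces +z (south), 90 faces -x (west),
    -90 faces +x (east), so yaw = -atan2(dx, dz).
    """
    return -math.degrees(math.atan2(tx - ax, tz - az))

def turn_delta(current_yaw: float, desired_yaw: float) -> float:
    """Signed smallest rotation from current_yaw to desired_yaw, in [-180, 180)."""
    return (desired_yaw - current_yaw + 180.0) % 360.0 - 180.0

print(path_lengths(3, 4))  # (7, 5.0)
```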

Given our experience thus far, I feel that our biggest challenge has been figuring out exactly what is possible with Malmo, especially since our project presents endless possibilities. Although the API provides many tools that have been very useful, figuring them out has involved a lot of trial and error. Some of these tools have also been buggy. ObservationFromRay, for example, should detect entities within our LineOfSight but occasionally does not recognize an animal directly in our agent’s crosshairs. By working on the project and reading the Malmo documentation, we have learned a lot about what we are able to do, and we can use this knowledge to move forward more effectively; it all comes down to reading and practice. We will also need to dig much deeper into the NLTK documentation to find techniques for interpreting complex user commands. As we learn more about what Malmo can do, we will be able to create more, and better, agent actions.